How To Scrape Paginated Data using Dumpling AI and ChatGPT in Make.com

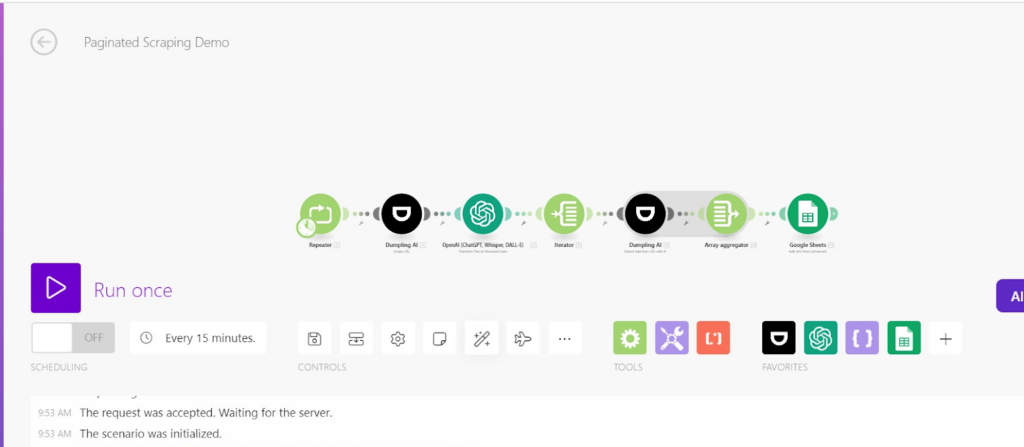

In this guide, we’ll walk through how to build a Make.com automation that scrapes multiple web pages, processes the data with Dumpling AI and OpenAI, and stores the results in Google Sheets. This process works well for collecting paginated data from websites and organizing it neatly.

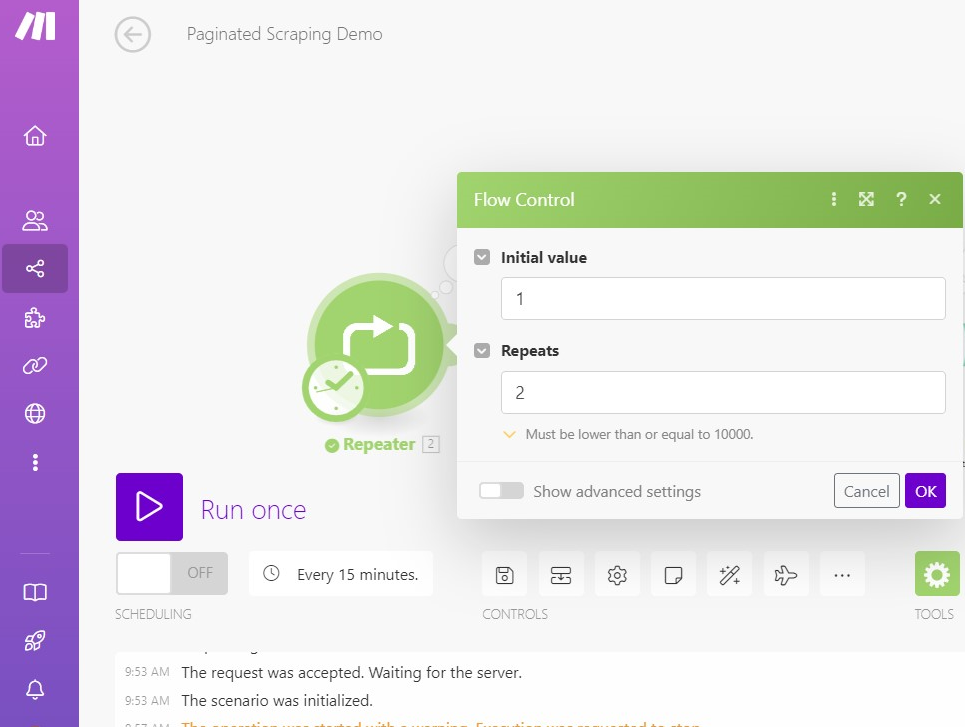

1. Setting Up the Repeater Module: Automate Pagination

The Repeater module is used to automate scraping across multiple pages of a website. Instead of manually entering each page’s URL, the repeater generates the URLs dynamically by changing the page number.

Why We Use the Repeater

- Automates Pagination: Many websites split data across several pages. The repeater changes the page number automatically so you can scrape each page without manually updating the URL.

- Saves Time: It handles multiple pages in one go, reducing the time spent configuring each URL.

For example, here’s the URL we used:

The {{2.i}} placeholder marks where the repeater inserts the current page number. On the first run, it scrapes page 1, then moves to page 2, and so on.

Repeater Settings

- Initial Value: Set to 1 (starting page).

- Repeats: Set the number of pages to scrape.

- Step: Usually set to 1, so it moves from page 1 to page 2, etc.

By using the repeater, we can scrape all paginated data automatically, saving time and ensuring complete coverage.
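
To make the repeater settings concrete, here is a minimal Python sketch of what the module effectively does. It is only an illustration, not Make.com’s implementation: the function names `repeater` and `page_urls` and the `example.com` URL are hypothetical, and `{i}` stands in for the `{{2.i}}` mapping.

```python
from typing import Iterator, List


def repeater(initial_value: int, repeats: int, step: int = 1) -> Iterator[int]:
    """Yield the counter values for the given Initial Value, Repeats, and Step."""
    for n in range(repeats):
        yield initial_value + n * step


def page_urls(url_template: str, initial_value: int, repeats: int, step: int = 1) -> List[str]:
    """Substitute each counter value into the page-number slot of the URL."""
    return [url_template.format(i=i) for i in repeater(initial_value, repeats, step)]


# Hypothetical listing URL; "{i}" plays the role of {{2.i}} in the scenario.
urls = page_urls("https://example.com/listings?page={i}", initial_value=1, repeats=3)
for url in urls:
    print(url)
```

With Initial Value 1, Repeats 3, and Step 1, the sketch produces one URL each for pages 1, 2, and 3, which mirrors how the scraper module receives a new URL on every repeater cycle.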

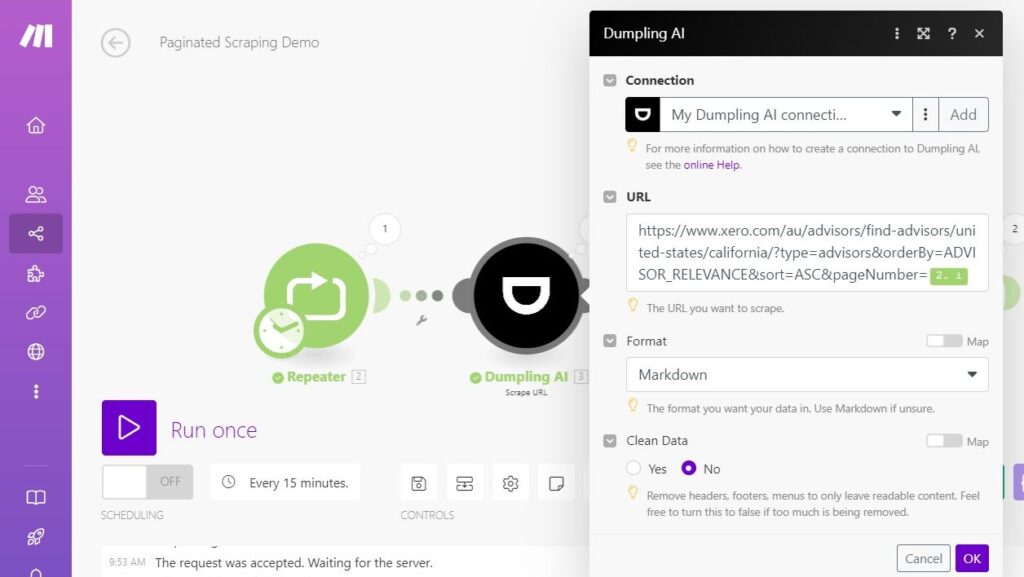

2. Scraping Data with Dumpling AI

Next, let’s add the Dumpling AI scraping module to extract content from each page. If this is your first time using Dumpling AI, you’ll need to connect your account. If you don’t have an account yet, click here to sign up and claim your 100 free credits to start scraping for free!

- Add the Dumpling AI Scraper Module to the scenario after the repeater.

- Configure the URL: Insert the URL of the website you want to scrape, making sure to use a dynamic page number from the repeater module as a placeholder. For example:

The {{2.i}} is a reference to the repeater’s current iteration, representing the page number.

Choose the format for your scraped data. You can pick Markdown, HTML, or screenshot; in this case, we’ll use Markdown.

To get all the details from the page, set the clean data option to No.

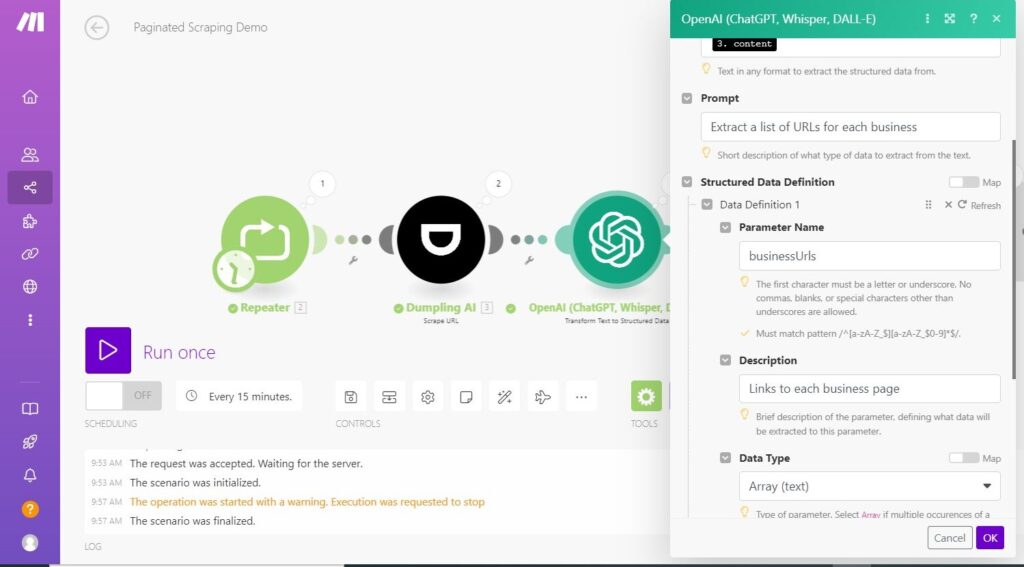

3. Use ChatGPT to Extract Key Information

In this workflow, once the data is scraped, we don’t use the “Create a Completion” module followed by the “Parse JSON” module. Instead, we use the ChatGPT Text to Structured Data module, which combines the functionality of both into a single step.

- Add the OpenAI GPT Module: After the scraper, add a module to process the scraped data. Set up a prompt for GPT to extract URLs or other important data. Here’s an example prompt:

“Extract a list of URLs for each business from the following text”.

- Set the Input Data: Map the raw scraped content from the previous step (scraper module) into the prompt input field. The placeholder {{3.content}} refers to the scraped content returned by module 3 in the scenario (the Dumpling AI scraper).

- Choose a Model: Pick a model based on the complexity of what is being scraped. For our example, we use GPT-4o-mini.

Defining the Output (Structured Data)

This step will define how the data will be extracted and returned.

- Configure Output Parameters: The OpenAI GPT module will allow you to define what kind of structured data you want to receive.

- Add a Parameter called businessUrls. Set it as an array of strings because you’ll be extracting multiple URLs from the text.

- You can also define more parameters depending on the data you want to extract (e.g., business names, addresses).
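
To picture what an “array of strings” parameter means in practice, the sketch below parses a sample result and checks its shape in plain Python. The sample JSON and the helper name `parse_business_urls` are assumptions for illustration; the actual bundle returned by the module may be shaped differently.

```python
import json
from typing import List

# Hypothetical structured output with a single businessUrls parameter.
raw_output = '{"businessUrls": ["https://example.com/biz/a", "https://example.com/biz/b"]}'


def parse_business_urls(raw: str) -> List[str]:
    """Parse JSON text and confirm businessUrls is an array of strings."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    urls = data.get("businessUrls")
    if not isinstance(urls, list) or not all(isinstance(u, str) for u in urls):
        raise ValueError("businessUrls must be an array of strings")
    return urls


business_urls = parse_business_urls(raw_output)
print(len(business_urls), "URLs extracted")
```

Declaring the parameter as an array up front is what lets the next module loop over the URLs one by one without a separate JSON parsing step.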

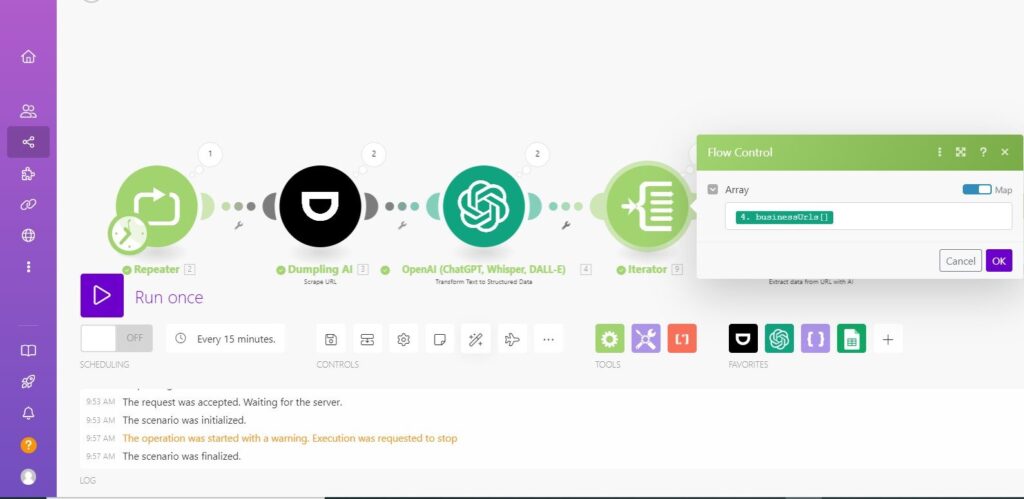

4. Feeding the Extracted Data

Next, use the Iterator to feed the extracted URLs from the previous step into the next action.

- Add Iterator: This will loop through the extracted URLs to further process each of them.

- Map the URL Field: Map the businessUrls extracted from GPT to be used in the next scraping module.
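
Conceptually, the Iterator turns one array into a series of separate bundles, one per element. The following Python sketch shows that idea only; the `iterate` function and the `position` and `value` keys are hypothetical names, not Make.com’s internal format.

```python
from typing import Dict, Iterator, List


def iterate(array: List[str]) -> Iterator[Dict[str, object]]:
    """Emit one bundle per array element, with a 1-based position."""
    for position, value in enumerate(array, start=1):
        yield {"position": position, "value": value}


# Hypothetical URLs standing in for the businessUrls array from the GPT step.
business_urls = ["https://example.com/biz/a", "https://example.com/biz/b"]
for bundle in iterate(business_urls):
    # Each bundle would trigger one run of the next scraping module.
    print(bundle["position"], bundle["value"])
```

Because every URL becomes its own bundle, the scraper that follows runs once per business rather than once per listing page.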

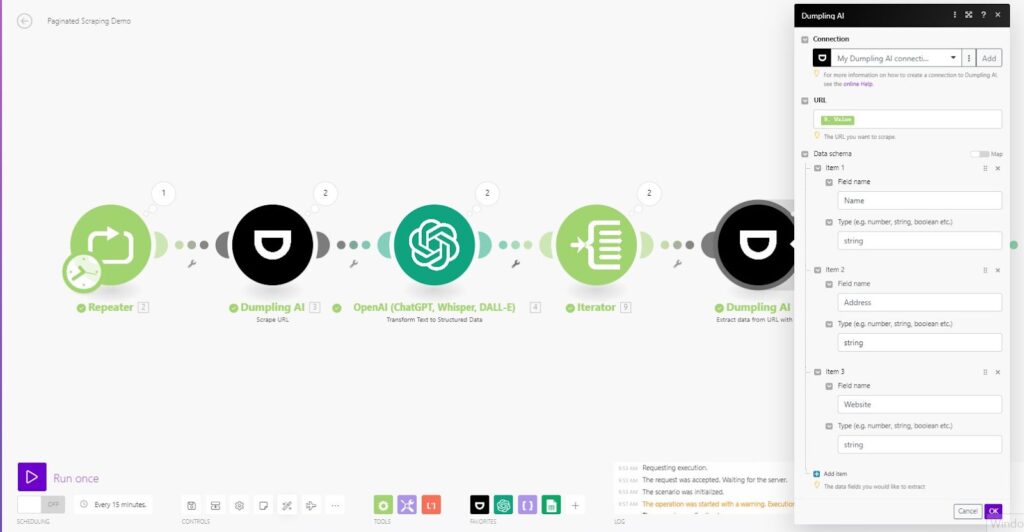

5. Scraping Each Business’s Page

Now that you have a list of URLs, let’s scrape data from each individual business page.

- Add Another Dumpling AI Scraper (the Extract data from URL with AI module): This will scrape details like business name, address, and website from each URL.

- Define the Schema: Set up the schema to extract specific fields like:

- Name

- Address

- Website
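
The three-field schema can be thought of as a small record type. Here is a hedged Python sketch of that idea; the `Business` class, the `to_business` helper, and the lowercase keys are assumptions for illustration, since the exact result format depends on how you define the schema in Dumpling AI.

```python
from dataclasses import dataclass


@dataclass
class Business:
    name: str = ""
    address: str = ""
    website: str = ""


def to_business(result: dict) -> Business:
    """Keep only the three schema fields; absent or null fields become ''."""
    return Business(
        name=str(result.get("name") or ""),
        address=str(result.get("address") or ""),
        website=str(result.get("website") or ""),
    )


# Hypothetical extraction result with one missing value and one extra key.
sample = {"name": "Acme Bakery", "website": None, "rating": 4.5}
print(to_business(sample))
```

Keeping the schema this narrow means every business page yields the same three fields, which makes the later mapping to spreadsheet columns predictable.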

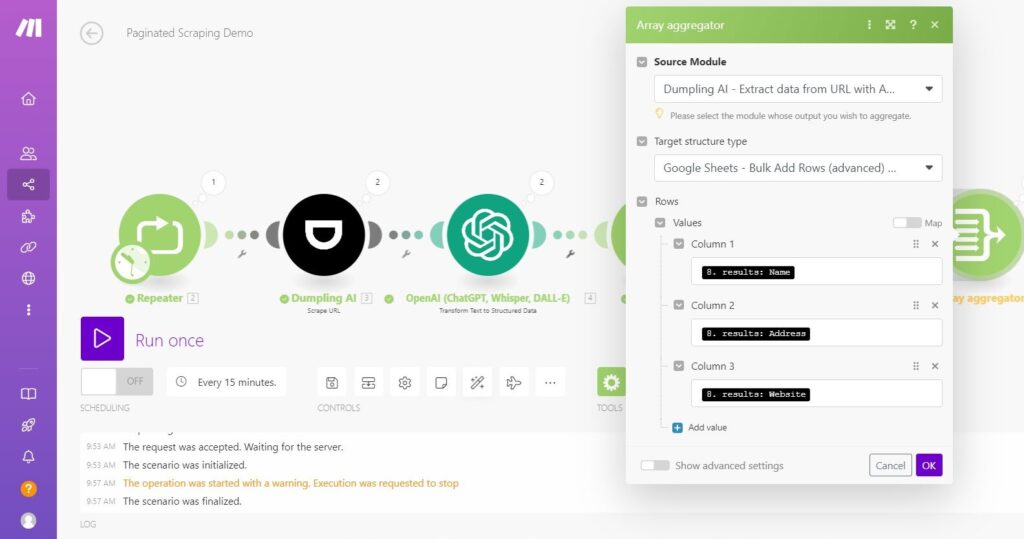

6. Aggregating the Data

To collect the data retrieved by Dumpling AI, you’ll need to add the Array Aggregator module. This module allows you to aggregate all the data you want to store in Google Sheets.

The result from Dumpling AI contains the data we want, such as the name, address, and website. Since this data is grouped in a collection, you need to map its fields individually before sending them to Google Sheets.

Here’s how to configure it:

- Set the Feeder: In the Array Aggregator module, set the feeder parameter to the previous module, which is the Dumpling AI Extract data from URL with AI module.

- Select the Target Structure Type: Under the Target Structure Type, select “Rows”. This tells the aggregator that the data will be parsed and sent to the Google Sheet as individual rows.

- Map the Variables: Next, map the specific variables from Dumpling AI (such as website, name, etc.) that you want to transfer onto the spreadsheet. This ensures that each piece of data is sent to the correct column in Google Sheets.

- Define Values: In the values field, input the fields you wish to extract from the Business. For this tutorial, we are mapping:

- Name

- Address

- Website

This setup will allow the automation to correctly parse and store all relevant data from Dumpling AI directly into the Google Sheet.
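
As a rough picture of what the aggregation produces, the sketch below collects per-business results into a list of rows with a fixed column order. The `aggregate_rows` helper, the `COLUMNS` list, and the sample data are hypothetical; in the scenario itself this mapping is configured in the Array Aggregator rather than written as code.

```python
from typing import Dict, List

# Column order for the sheet, matching the fields mapped in this tutorial.
COLUMNS = ["name", "address", "website"]


def aggregate_rows(bundles: List[Dict[str, str]]) -> List[List[str]]:
    """Turn one dict per business into one row per business, in column order."""
    return [[bundle.get(column, "") for column in COLUMNS] for bundle in bundles]


# Hypothetical results from the per-business scraping step.
results = [
    {"name": "Acme Bakery", "address": "1 Main St", "website": "https://acme.example"},
    {"name": "Blue Cafe", "website": "https://blue.example"},
]
rows = aggregate_rows(results)
for row in rows:
    print(row)
```

Each inner list corresponds to one spreadsheet row, and a missing field becomes an empty cell instead of shifting the remaining values into the wrong columns.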

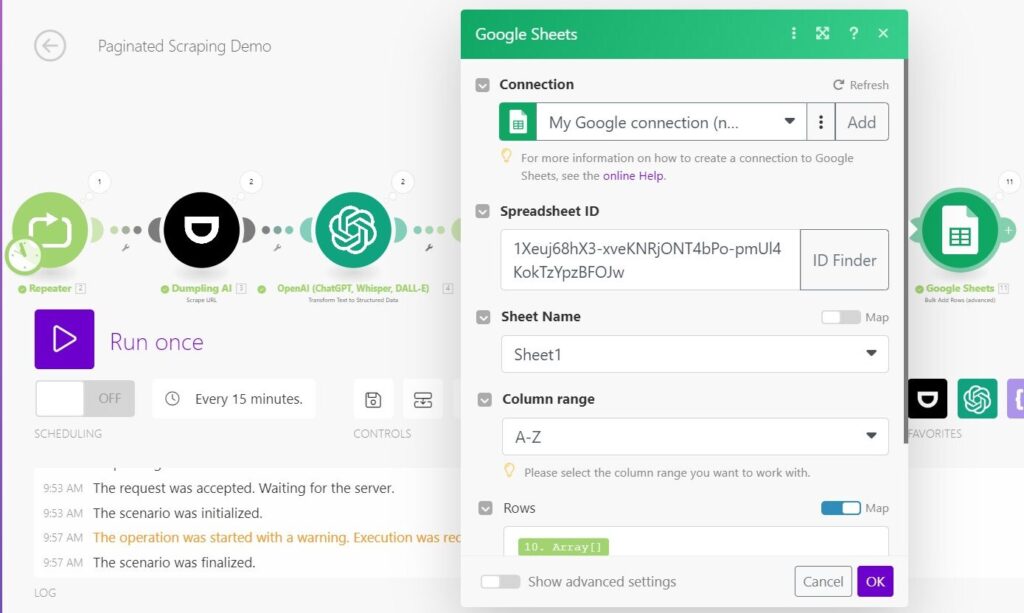

7. Saving Data to Google Sheets

Add the Google Sheets Module

- Next, add the Google Sheets – Add Multiple Rows module to your scenario.

- Connect your Google account if you haven’t already. Once connected, select the specific Google Sheets document and the sheet where the data should be stored.

- Configure the following parameters:

- Spreadsheet ID: Input the ID of the spreadsheet where you want the data to be stored.

- Sheet Name: Select the name of the sheet, e.g., Sheet1.

- Rows: Map the aggregated values (from the array aggregator) to the rows in your Google Sheet.

Testing and Running the Scenario

Test the Scenario

- Before running the automation, test the scenario by clicking on the “Run Once” button. This will help you verify that the data is being scraped correctly and stored in Google Sheets.

- Check the Google Sheets file to confirm that the correct data is appearing in the expected columns.

Activate the Scenario

- Once you’ve confirmed that everything works as expected, turn on the scenario by clicking on the “Activate” button. Your automation will now run based on the triggers you set.

Conclusion

With Dumpling AI, scraping paginated data becomes a seamless, efficient process. By following these steps, you’ll be able to gather valuable information from multiple pages in no time. Whether you’re extracting data for business insights or automating repetitive tasks, this setup will save you countless hours. Don’t forget to take advantage of the 250 free credits if you’re new to Dumpling AI, and start your scraping journey today.

Get the Blueprint Featured in This Guide

Access the full blueprint here to get started on setting up this automation effortlessly!